InfluxDB Tops Cassandra in Time Series Data & Metrics Benchmark

By Chris Churilo / Product, Use Cases / Oct 20, 2022

This blog post was updated on October 20, 2022, with the latest benchmark results for InfluxDB 1.8.10 and Cassandra v4.0.5. We update it regularly as new benchmark figures become available.

At InfluxData, one of the questions developers and architects regularly ask us is: “How does InfluxDB compare to Cassandra for time series workloads?” This question usually comes up for one of two reasons. First, they may be starting a brand new project and doing their due diligence by evaluating a few solutions head-to-head, in which case the answer helps them build their comparison grid. Second, they may already be using Cassandra to ingest data in an existing application, want to integrate metrics collection into that system, and suspect there is a better tool than Cassandra for the job.

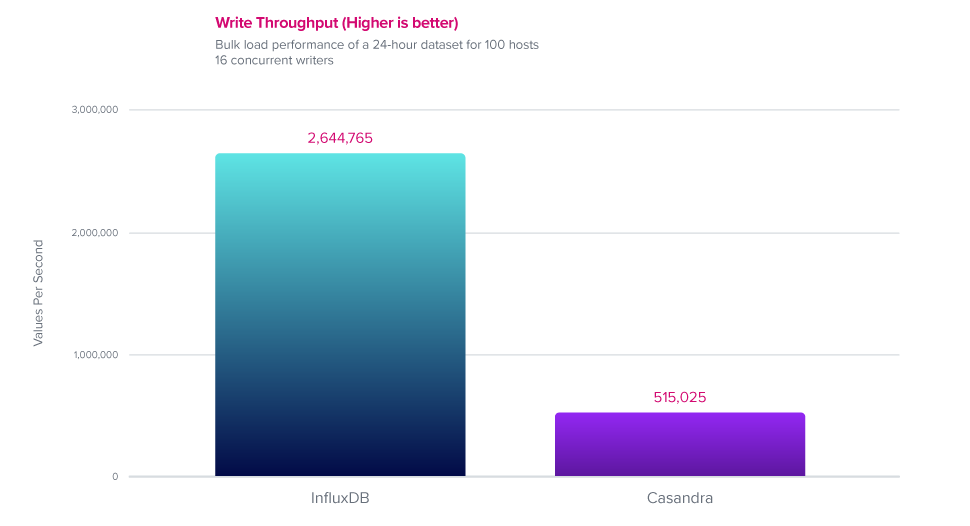

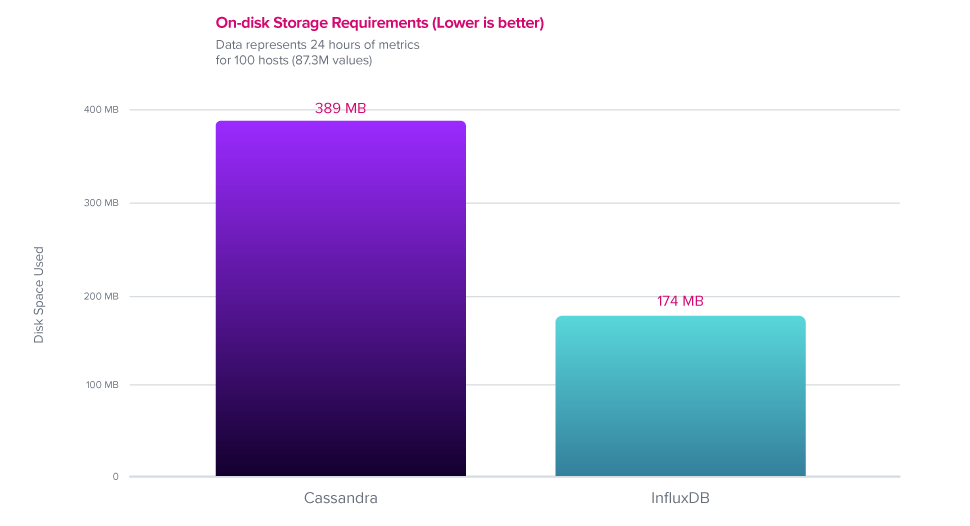

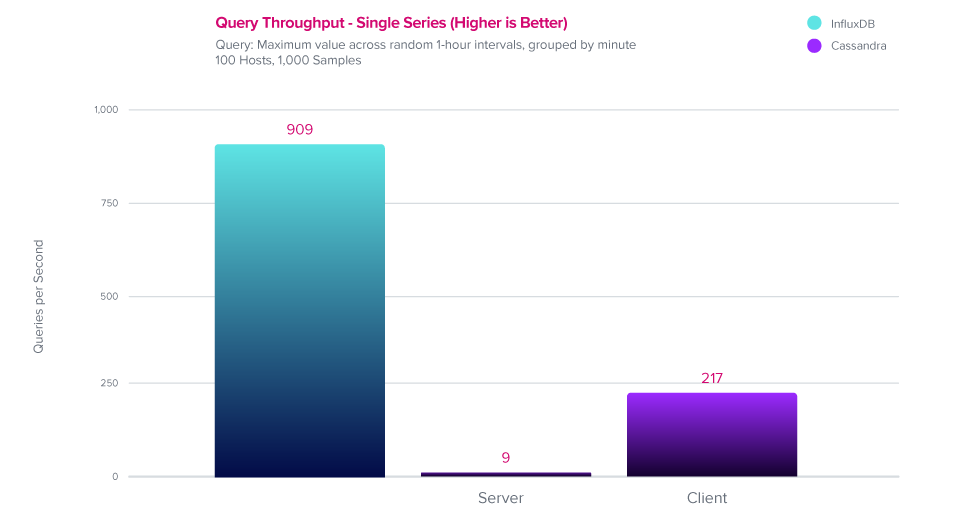

Over the last few weeks, we set out to compare the performance and features of InfluxDB and Cassandra for common time series workloads, specifically looking at data ingestion rates, on-disk data compression, and query performance. InfluxDB outperformed Cassandra in all three tests, delivering 5x greater write throughput, using 2.4x less disk space, and returning up to 100x faster response times for the tested queries.

Before we dig into the details of the benchmarks, it’s important to point out that a head-to-head comparison of InfluxDB and Cassandra for time series workloads was impossible without first writing a significant amount of application code to make up for the functionality Cassandra lacks. Effectively, you’d need to rewrite portions of InfluxDB in your own application. To put these benchmarks together, we wrote basic versions of these features; a production application would take significantly more effort.

To read the complete details of the benchmarks and methodology, download the Benchmarking InfluxDB vs. Cassandra for Time Series Data, Metrics & Management technical paper or watch the recorded webinar.

Our overriding goal was to create a consistent, up-to-date comparison that reflects the latest developments in both InfluxDB and Cassandra, and to cover other databases and time series solutions later. We will periodically re-run these benchmarks and update our detailed technical paper with our findings. All of the code for these benchmarks is available on GitHub. Feel free to open issues or pull requests on that repository if you have any questions, comments, or suggestions.

Now, let’s take a look at the results.

Versions tested

InfluxDB v1.8.10

InfluxDB is an open source time series database written in Go. At its core is a custom-built storage engine called the Time-Structured Merge (TSM) Tree, which is optimized for time series data. Through InfluxQL, its custom SQL-like query language, InfluxDB provides out-of-the-box support for mathematical and statistical functions across time ranges and is perfect for custom monitoring and metrics collection, real-time analytics, and IoT and sensor data workloads.

Cassandra v4.0.5

Cassandra is a distributed, non-relational database written in Java. It was originally built at Facebook, open-sourced in 2008, and officially became part of the Apache Software Foundation in 2010. It is a general-purpose platform that provides a partitioned row store, offering features of both key-value and column-oriented data stores.

Though it provides excellent tools for building a scalable, distributed database, Cassandra lacks most of the key features of a time series database. A common pattern is therefore to build application logic on top of Cassandra to handle the missing functionality, which is what we did here to make a fair assessment of Cassandra for time series workloads.
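
As a rough illustration of that application logic, the sketch below shows one small piece of it: rolling raw `(timestamp, value)` rows up into fixed time windows and averaging each window, roughly what an InfluxQL `MEAN(...)` query with `GROUP BY time(...)` returns natively. It is a minimal sketch, not the code used in these benchmarks; the helper name `mean_by_window` and the row shape are assumptions, and it presumes the rows have already been read from Cassandra.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def mean_by_window(rows: Iterable[Tuple[int, float]], window_s: int) -> Dict[int, float]:
    """Average (unix_seconds, value) rows per fixed, epoch-aligned time window.

    Hypothetical helper: approximates InfluxQL's MEAN() with GROUP BY time(),
    computed in the application after the raw rows were fetched from Cassandra.
    """
    sums: Dict[int, float] = defaultdict(float)
    counts: Dict[int, int] = defaultdict(int)
    for ts, value in rows:
        # Align each timestamp to the start of its window.
        bucket = ts - (ts % window_s)
        sums[bucket] += value
        counts[bucket] += 1
    # Return one mean per window, in chronological order.
    return {b: sums[b] / counts[b] for b in sorted(sums)}


# Three 10-second CPU samples rolled up into 60-second windows.
rows = [(0, 10.0), (10, 20.0), (70, 30.0)]
print(mean_by_window(rows, 60))  # {0: 15.0, 60: 30.0}
```

In this approach, every raw row for the queried time range has to be transferred to the application server before it is aggregated, which is one source of the extra application-level load that comes with Cassandra.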

About the benchmarks

In building a representative benchmark suite, we identified the most commonly evaluated characteristics for working with time series data. We looked at performance across three vectors:

- Data ingest performance – measured in values per second

- On-disk storage requirements – measured in bytes

- Mean query response time – measured in milliseconds
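
Each of these metrics reduces to simple arithmetic, and the "Nx" figures quoted in this post can be read as ratios between the two databases' results. The sketch below shows one way to compute them, assuming that higher is better for ingest rate while lower is better for bytes on disk and response time. The function names are hypothetical, and the inputs in the usage lines are made-up numbers for illustration, not benchmark measurements.

```python
from statistics import mean
from typing import Sequence


def ingest_rate(values_written: int, elapsed_s: float) -> float:
    """Data ingest performance, in values per second."""
    return values_written / elapsed_s


def mean_response_ms(latencies_ms: Sequence[float]) -> float:
    """Mean query response time, in milliseconds."""
    return mean(latencies_ms)


def times_better(candidate: float, baseline: float, higher_is_better: bool) -> float:
    """Factor by which `candidate` beats `baseline`.

    Throughput: higher is better, so divide candidate by baseline.
    Bytes on disk and response time: lower is better, so divide baseline by candidate.
    """
    return candidate / baseline if higher_is_better else baseline / candidate


# Made-up inputs for illustration only (not benchmark results).
rate_a = ingest_rate(600_000, 2.0)  # 300,000 values/s
rate_b = ingest_rate(600_000, 6.0)  # 100,000 values/s
print(times_better(rate_a, rate_b, higher_is_better=True))  # 3.0
print(times_better(1_000, 3_000, higher_is_better=False))  # 3.0 (bytes on disk)
print(times_better(mean_response_ms([4.0, 6.0]), 50.0, higher_is_better=False))  # 10.0
```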

About the dataset

For this benchmark, we focused on a dataset that models a common DevOps monitoring and metrics use case, in which a fleet of servers periodically reports system and application metrics at a regular time interval. Every 10 seconds, we sampled 100 values across 9 subsystems (CPU, memory, disk, disk I/O, kernel, network, Redis, PostgreSQL, and Nginx). For the key comparisons, we used a dataset representing 100 servers over a 24-hour period, a relatively modest deployment.

- Number of servers: 100

- Values measured per server: 100

- Measurement interval: 10s

- Dataset duration(s): 24h

- Total values in dataset: 87,264,000 per day

This is only a subset of the entire benchmark suite, but it’s a representative example. If you’re interested in additional detail, you can read more about the testing methodology on GitHub.
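
The dataset total listed above is servers × values per sample × samples per day, where a 10-second interval gives 86,400 / 10 = 8,640 samples per server per day. The minimal sketch below does that arithmetic; the function name `total_values` is hypothetical. Note that exactly 100 values per server yields 86,400,000, while the listed total of 87,264,000 works out to 101 values per server per sample.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def total_values(servers: int, values_per_server: int, interval_s: int,
                 duration_s: int = SECONDS_PER_DAY) -> int:
    """Values in the dataset: servers x values per sample x samples per server."""
    samples_per_server = duration_s // interval_s  # 8,640 per day at a 10 s interval
    return servers * values_per_server * samples_per_server


print(total_values(100, 100, 10))  # 86400000
print(total_values(100, 101, 10))  # 87264000, matching the listed total
```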

Write performance

InfluxDB outperformed Cassandra by 5x when it came to data ingestion.

On-disk compression

InfluxDB outperformed Cassandra by delivering 2.4x better compression.

Query performance

InfluxDB outperformed Cassandra by delivering up to 100x better query performance.

Summary

Ultimately, many of you were probably not surprised that a purpose-built time series database designed to handle metrics would significantly outperform a general-purpose database like Cassandra for these types of workloads. The gap is especially glaring when workloads require temporal query flexibility, a common characteristic of real-time analytics and sensor data systems; there, a purpose-built time series database like InfluxDB makes all the difference.

It’s important to emphasize that achieving optimal query performance with Cassandra requires a significant amount of additional application-level processing. This can have a major impact on production deployments, where each query places additional load on the application servers.

In conclusion, we strongly encourage developers and architects to run these benchmarks themselves to independently verify the results on their own hardware and datasets. However, for those looking for a starting point on which technology delivers better time series data ingestion, compression, and query performance “out-of-the-box”, InfluxDB is the clear winner across all these dimensions, especially as datasets grow larger and the system runs over a longer period of time.

What's next?

- Download the detailed technical paper: Benchmarking InfluxDB vs. Cassandra for Time Series Data, Metrics & Management.

- Download and get started with InfluxDB.

- Check out the video playback of the companion webinar.

- Join the Community!