An Introduction to Stream Processing

By

Charles Mahler

Developer

Oct 13, 2023

Navigate to:

This article was originally published on The New Stack and is reposted here with permission.

In the field of big data analytics, stream processing has emerged as a crucial paradigm, reshaping how businesses interact with data. But what is stream processing and why are more businesses using it?

Stream processing is a method of continuously ingesting, analyzing and acting on data as it’s generated. Unlike traditional batch processing, where data is collected over a period of time and then processed in chunks, stream processing operates on data as it is collected, offering insights and actions within milliseconds to seconds of data arrival. The main benefits of this approach when implemented properly are:

- Real-time insights

- Operational efficiency

- Improved user experience for data analysts and end users

- Improved scalability

In this article, you will get an overview of how stream-processing systems are structured and learn about some of the most popular tools used to implement stream-processing systems.

Stream-processing architecture

The architecture of stream-processing systems can vary significantly depending on the volume of data being processed, but at a high level the core components will remain the same. Let’s look at what these components are and what their role is in a stream processing system:

- Data producers — To even get started with stream processing you need some data to process, which means you need something to create data in the first place. Some common data producers are things like IoT sensors, software applications producing metrics or user activity data, and financial data. The volume and frequency of data being produced will affect the architecture needed for other components in the stream-processing system.

- Data ingestion — The data ingestion component of a stream-processing system is critical for ensuring reliability and scalability. The data ingestion layer is what captures data from various sources and acts as a buffer for data before it is sent to be processed. A proper data ingestion layer will ensure data is collected in a fault-tolerant manner so no data is lost before processing, and also that short-term increases in data volume is prevented from overwhelming the stream-processing system.

- Data processing — This is the heart of a stream-processing system, where things like real-time analytics, data transformation, filtering or enrichment are performed. Depending on the volume of data and what needs to be done to the data, this component could be anything from a simple Python script to a distributed computing framework.

- Data storage — In many cases, once real-time analysis is done using stream processing, there will still be a need for storing the data for historical analysis or archival purposes. Common solutions are things like data warehouses, time series databases and object storage.

Popular tools for stream processing

So now that you know about the architecture of stream-processing systems, let’s look at some common tools used to implement stream processing and perform the tasks of ingesting, processing and storing data.

InfluxDB

InfluxDB is an open source, time series database, which makes it an ideal fit for many stream-processing systems that work with time series data from applications for things like Internet of Things, finance and application performance monitoring.

InfluxDB is optimized for supporting high volume write throughput and efficient querying across time ranges and aggregations like those seen for stream processing. InfluxDB is also able to use cheap object storage for persisting data, which makes it ideal for storing your stream-processing data long term for historical analysis.

Telegraf

Telegraf is an open source server agent used to collect, process and output data. Telegraf has over 300 different plugins for inputs and outputs, which allow users to easily integrate with almost any data source without having to write code.

Telegraf can be seen as a solution for gluing together the different components of your stream-processing system. Telegraf can serve as a data ingestion tool, bridging the gap between diverse data sources and the data-processing layer. By using its extensive library of input and output plugins, Telegraf can capture data from different communication protocols like HTTP, applications, databases or IoT devices in real time. Telegraf also has plugins for data processing, so for some workloads it can also fill the role of the data-processing layer and do basic analysis on data as it is collected.

Kafka

Apache Kafka is a distributed event-streaming platform optimized for building real-time data pipelines and streaming applications. In a stream-processing system, Kafka acts as both a message broker and a storage system, ensuring high-throughput and fault-tolerant data streaming between producers and consumers.

Producers push data into Kafka topics, while consumers pull this data for processing. With its built-in stream-processing capabilities, Kafka allows for real-time data transformation, aggregation and enrichment directly within the platform using Kafka Streams. Its ability to handle massive volumes of events makes it a go-to solution for real-time analytics, monitoring and event-driven architectures.

Amazon Kinesis

AWS Kinesis is a suite of tools specifically designed to handle real-time streaming data on the AWS platform. Within a stream-processing architecture, Kinesis serves as a data ingestion and processing conduit. Kinesis Data Streams can capture gigabytes of data per second from hundreds of sources, such as logs, social media feeds or IoT telemetry.

Once ingested, this data can be immediately processed using Kinesis Data Analytics with SQL queries or integrated with other services like AWS Lambda for custom processing logic. Kinesis Data Firehose simplifies the delivery of streaming data to destinations like Amazon S3, Amazon Redshift or Elasticsearch for further analysis or storage.

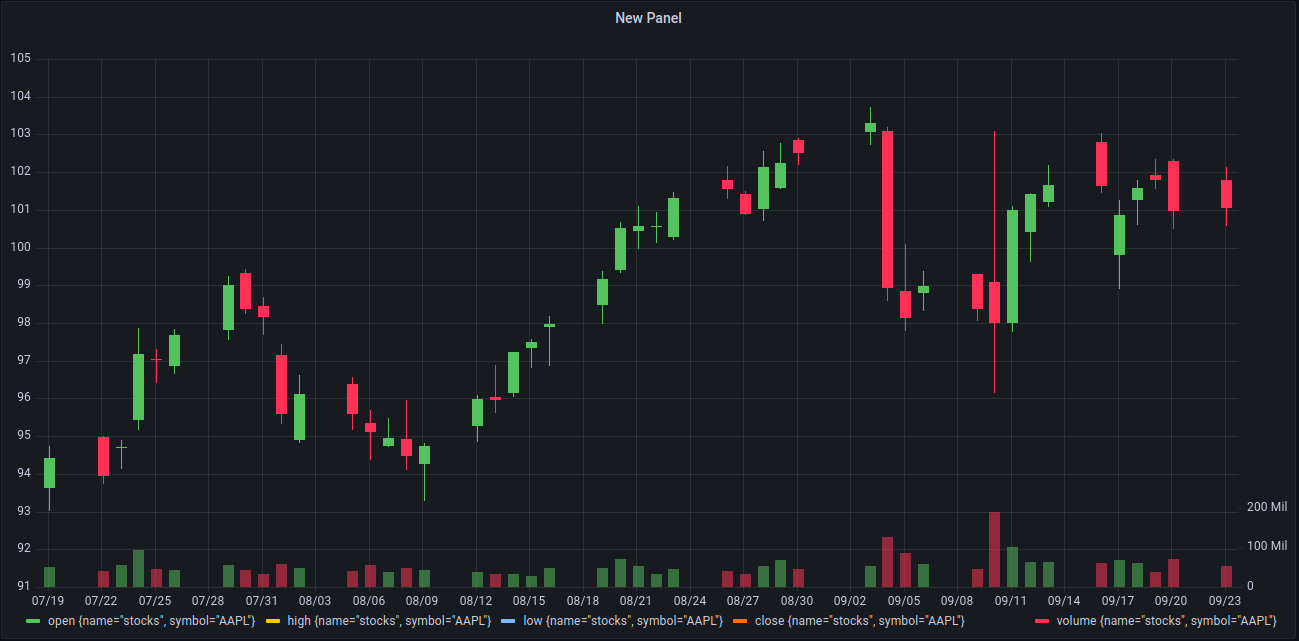

Grafana

Grafana is an open source platform for monitoring and visualization. Once data is ingested, processed and stored by the stream-processing system, Grafana can tap into these data sources, offering real-time dashboards that reflect the current state of the streaming data. By integrating with databases commonly used in streaming scenarios, such as InfluxDB, Prometheus or Kafka, Grafana provides a dynamic window into the pulse of the data stream.

Users can visualize metrics, set up alerts and overlay historical data for comparative analysis. Grafana allows users to transform raw data streams into actionable insights, enabling users to swiftly react to emerging trends or anomalies.

AWS Lambda

AWS Lambda is a serverless computing platform that allows developers to run code in response to specific events without provisioning or managing servers. In the context of stream processing, AWS Lambda can play a crucial role in handling real-time data. As data streams in through sources like Amazon Kinesis or Amazon S3, Lambda functions can be triggered to process, transform or analyze this data instantaneously.

Whether it’s for real-time analytics, data cleansing, enrichment or routing data to other services, Lambda ensures that operations are performed swiftly, scaling automatically with the volume of incoming data. This serverless approach not only streamlines the process of ingesting and reacting to data streams, but also optimizes costs, as users are billed only for the actual compute time used.

Apache Spark

Apache Spark is a distributed data-processing engine, which includes Spark Streaming, a component tailored specifically for real-time data analytics. Spark Streaming can ingest data from various sources like Kafka or Kinesis. It then divides the incoming data into micro-batches, which are processed using Spark’s distributed computing capabilities. This micro-batching approach, while not purely in real time, achieves near real-time processing with minimal latency. The processed data can be easily integrated with Spark’s batch processing, machine learning or graph-processing modules, allowing for a unified analytics approach.

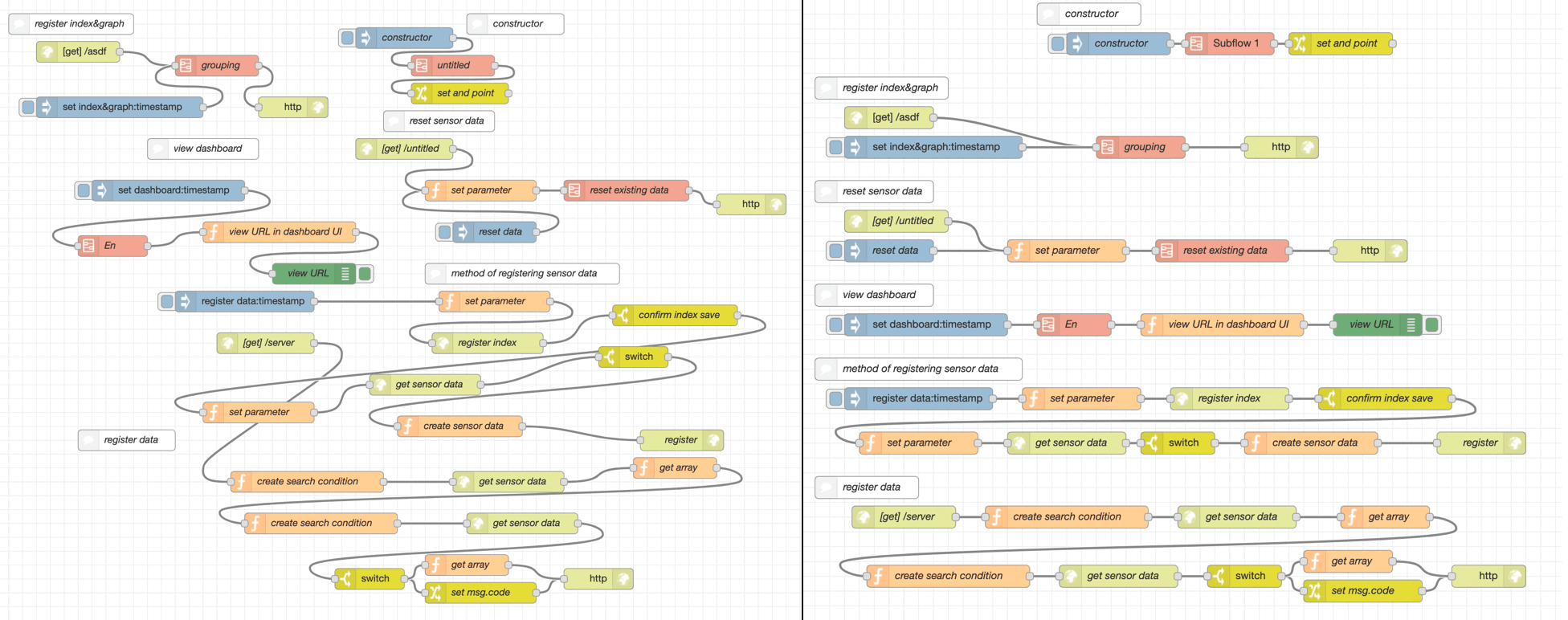

Node-RED

Node-RED is a flow-based programming tool that enables users to wire together devices, APIs and online services. As part of a stream-processing system Node-RED can act as an intuitive intermediary layer, facilitating the smooth flow of data between sources and processing endpoints. With its drag-and-drop interface, users can visually design data flows, integrate a variety of input sources, apply transformations and route the data to various stream-processing tools or databases.

Especially popular in IoT scenarios, Node-RED can collect data from sensors, devices or external APIs, process it in real time using custom logic or predefined nodes, and then forward it to platforms like Apache Kafka, MQTT brokers or time series databases for further analysis. Its flexibility and extensibility make Node-RED a great tool for rapidly prototyping and deploying stream-processing workflows without diving deep into code.

Next steps

In the rapidly evolving world of data analytics, stream processing has emerged as a critical technique for businesses and organizations to harness the potential of real-time data. The tools and frameworks we’ve explored, from Kafka’s robust data streaming to Grafana’s insightful visualizations, offer a glimpse into the landscape of solutions that facilitate real-time decision making.

However, understanding and choosing the right tools is just the beginning. The next steps involve diving deeper into the nuances of each tool, picking the right one for your specific use cases and iterating based on potentially evolving requirements in your project. Getting hands-on experience with these tools and testing out and prototyping different solutions to see which works best are common best practices used before committing to building a production stream-processing system.